Homelab Introduction

In this post I'll explain how I've set up my homelab, what I use it for, and how I manage it.

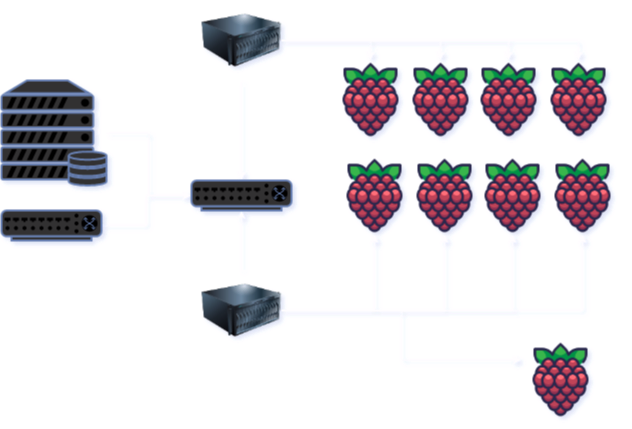

The homelab is a collection of Raspberry Pis, a Western Digital NAS, some network switches, a Fritz Box, and two Asus Mini PCs.

The homelab is primarily used for exploring and learning new technologies, such as Kubernetes, GitOps and DevSecOps, and for self-hosting my own applications.

Inventory

So, what do we have?

- 1x Western Digital NAS

- 1x Fritz Box (Router, providing DHCP, DNS, and Internet access)

- 2x 8-port Netgear switch

- 1x 16-port Netgear switch

- 2x Asus Mini PC

- 8x Raspberry Pi 4

- 1x Raspberry Pi 5

The NAS is used to provide NFS storage to the Kubernetes cluster.
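The post doesn't show how the NFS share is consumed by the clusters. One common option is a statically provisioned PersistentVolume (a dynamic NFS provisioner is the other usual route). The sketch below builds such a manifest in Python, assuming a hypothetical NAS address and export path (`NAS_SERVER`, `NAS_EXPORT`) and a hypothetical helper name; it is not the author's actual configuration.

```python
import json

# Hypothetical values: replace with the NAS's real address and export path.
NAS_SERVER = "192.168.178.20"
NAS_EXPORT = "/nfs/kubernetes"


def nfs_persistent_volume(name: str, size: str, subdir: str) -> dict:
    """Build a PersistentVolume manifest that points at a directory on the NAS."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": size},
            # NFS lets pods on several nodes mount the same volume read-write.
            "accessModes": ["ReadWriteMany"],
            # Keep the data on the NAS when the bound claim is deleted.
            "persistentVolumeReclaimPolicy": "Retain",
            "nfs": {"server": NAS_SERVER, "path": f"{NAS_EXPORT}/{subdir}"},
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON as well as YAML, e.g. `kubectl apply -f pv.json`.
    print(json.dumps(nfs_persistent_volume("grafana-data", "10Gi", "grafana"), indent=2))
```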

Machines

The machine setup is as follows:

One of the Mini PCs (Grape) has five Raspberry Pis connected to it, as its group includes the only Pi 5 so far.

| Machine | Type | Notable Software | Kubernetes Role | Memory | Cores | Storage |
|---|---|---|---|---|---|---|
| Mandarin | Mini PC | Harbor, Portainer | Control Plane | 16GB | 8 | 1TB SSD |
| Grape | Mini PC | CoreDNS, Keycloak | Control Plane | 16GB | 8 | 1TB SSD |
| R-Pi4 (5x) | R-Pi | N/A | Agent | 4GB | 4 | 128GB USB |
| R-Pi4 (3x) | R-Pi | N/A | Agent | 8GB | 4 | 128GB USB |
| R-Pi5 (1x) | R-Pi | N/A | Agent | 8GB | 4 | 128GB USB |

Each Mini PC is the control plane node of one of the clusters, and also uses Docker Compose to run software that should live outside the Kubernetes clusters.
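To get a feel for the total capacity, the machine table can be summed up. This sketch copies the per-machine values from the table; it assumes 1TB counts as 1000 GB and leaves out the NAS, whose capacity isn't listed. The `MachineGroup` type and `totals` helper are illustrative names only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MachineGroup:
    name: str
    count: int
    memory_gb: int   # per machine
    cores: int       # per machine
    storage_gb: int  # per machine; assumption: 1TB counted as 1000 GB


# Values copied from the machine table.
MACHINES = [
    MachineGroup("Mandarin", 1, 16, 8, 1000),
    MachineGroup("Grape", 1, 16, 8, 1000),
    MachineGroup("R-Pi4 4GB", 5, 4, 4, 128),
    MachineGroup("R-Pi4 8GB", 3, 8, 4, 128),
    MachineGroup("R-Pi5", 1, 8, 4, 128),
]


def totals(groups):
    """Return (machines, memory in GB, cores, storage in GB) summed over all groups."""
    return (
        sum(g.count for g in groups),
        sum(g.count * g.memory_gb for g in groups),
        sum(g.count * g.cores for g in groups),
        sum(g.count * g.storage_gb for g in groups),
    )


if __name__ == "__main__":
    machines, mem, cores, storage = totals(MACHINES)
    print(f"{machines} machines, {mem} GB RAM, {cores} cores, {storage} GB storage")
```

Running it prints `11 machines, 84 GB RAM, 52 cores, 3152 GB storage`.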

Kubernetes Clusters

To learn more about managing a multi-cluster Kubernetes setup, I've set up two clusters:

- Grape + 4x R-Pi4 + 1x R-Pi5

- Mandarin + 4x R-Pi4
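Since Cilium also provides the cross-cluster networking between these two clusters, Cilium's Cluster Mesh prerequisites apply: each cluster needs a unique name and cluster ID, and the pod CIDR ranges must not overlap. Two clusters installed with the same defaults (whichever component hands out pod addresses, K3S's cluster CIDR or Cilium's IPAM pool) would collide. A small pre-flight check, as a sketch with hypothetical IDs and CIDRs (the post doesn't list the real ones):

```python
import ipaddress
from itertools import combinations

# Hypothetical settings; the post does not list the real cluster IDs or pod CIDRs.
CLUSTERS = {
    "grape": {"cluster_id": 1, "pod_cidr": "10.42.0.0/16"},
    "mandarin": {"cluster_id": 2, "pod_cidr": "10.52.0.0/16"},
}


def mesh_problems(clusters: dict) -> list:
    """Return Cluster Mesh prerequisite violations (an empty list means none found)."""
    problems = []
    ids = [c["cluster_id"] for c in clusters.values()]
    if len(ids) != len(set(ids)):
        problems.append("cluster IDs must be unique")
    # With default settings Cilium accepts mesh cluster IDs from 1 to 255.
    problems += [f"cluster ID {i} is outside 1-255" for i in ids if not 1 <= i <= 255]
    # Pod IPs must be routable across clusters, so the ranges may not overlap.
    for (a, ca), (b, cb) in combinations(clusters.items(), 2):
        net_a = ipaddress.ip_network(ca["pod_cidr"])
        net_b = ipaddress.ip_network(cb["pod_cidr"])
        if net_a.overlaps(net_b):
            problems.append(f"pod CIDRs of {a} and {b} overlap")
    return problems


if __name__ == "__main__":
    print(mesh_problems(CLUSTERS) or "no problems found")
```

Cluster names are unique here by construction, because they are dictionary keys.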

Harbor is used as an internal registry and as a Docker Hub proxy, and Portainer is used to manage the Docker Compose setup on the Mini PCs. K3S is configured to use Harbor as a Docker Hub proxy, so that the clusters can pull images from Docker Hub without running into rate limits.
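When Harbor acts as a Docker Hub proxy, images are pulled through a proxy-cache project as `<harbor-host>/<project>/<repository>:<tag>`, and Docker Hub's official images (such as `nginx`) live under `library/`. The K3S registry mirror settings are what actually route pulls through Harbor; this sketch only makes the name mapping visible. The host name `harbor.homelab.lan`, the project name `dockerhub`, and the function name are hypothetical.

```python
# Hypothetical Harbor host and proxy-cache project name.
HARBOR_HOST = "harbor.homelab.lan"
PROXY_PROJECT = "dockerhub"

# Registry host names that all refer to Docker Hub.
DOCKER_HUB_HOSTS = {"docker.io", "index.docker.io", "registry-1.docker.io"}


def to_harbor_proxy(image: str) -> str:
    """Rewrite a Docker Hub image reference to pull through a Harbor proxy-cache project."""
    name, _, digest = image.partition("@")
    tag = ""
    # A ':' after the last '/' separates the tag (an earlier one would be a registry port).
    if name.rfind(":") > name.rfind("/"):
        name, tag = name.rsplit(":", 1)
    parts = name.split("/")
    first = parts[0]
    # The first component names a registry if it looks like a host name.
    if len(parts) > 1 and ("." in first or ":" in first or first == "localhost"):
        if first not in DOCKER_HUB_HOSTS:
            raise ValueError(f"{image!r} is not a Docker Hub image")
        parts = parts[1:]
    # Official images such as "nginx" live under "library/" on Docker Hub.
    if len(parts) == 1:
        parts = ["library", *parts]
    ref = f"{HARBOR_HOST}/{PROXY_PROJECT}/{'/'.join(parts)}"
    if tag:
        ref += f":{tag}"
    elif not digest:
        ref += ":latest"
    return f"{ref}@{digest}" if digest else ref
```

For example, `to_harbor_proxy("nginx")` gives `harbor.homelab.lan/dockerhub/library/nginx:latest`, while images from other registries (such as `ghcr.io/...`) are rejected.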

So that Kubernetes is not blind to the Docker Compose applications, I've set up K3S on those machines to use Docker Engine as its container runtime, rather than containerd.

Keycloak is used as an identity provider for the Kubernetes clusters, and for some of the applications running on the clusters.
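The post doesn't show how the clusters trust Keycloak. A common approach is to register Keycloak as the API server's OIDC issuer; K3S passes API-server flags through `--kube-apiserver-arg`. This sketch builds those flags, assuming a hypothetical Keycloak URL, realm and client ID, and a Keycloak version (17 or later) that serves realms under `/realms/<realm>`:

```python
# Hypothetical Keycloak location and client; the post does not name them.
KEYCLOAK_URL = "https://keycloak.homelab.lan"
REALM = "homelab"
CLIENT_ID = "kubernetes"


def k3s_oidc_args(keycloak_url: str, realm: str, client_id: str) -> list:
    """Build K3S server flags that make the API server accept Keycloak-issued ID tokens."""
    # Keycloak 17+ serves realms at /realms/<realm>; older releases used /auth/realms/<realm>.
    issuer = f"{keycloak_url.rstrip('/')}/realms/{realm}"
    apiserver_flags = {
        "oidc-issuer-url": issuer,
        "oidc-client-id": client_id,
        # Use the human-readable username instead of the opaque subject ID.
        "oidc-username-claim": "preferred_username",
        # Assumes a group-membership mapper adds a "groups" claim to the token.
        "oidc-groups-claim": "groups",
    }
    return [f"--kube-apiserver-arg={k}={v}" for k, v in apiserver_flags.items()]


if __name__ == "__main__":
    print(" ".join(["k3s", "server", *k3s_oidc_args(KEYCLOAK_URL, REALM, CLIENT_ID)]))
```

If Keycloak's certificate is signed by a private CA, the API server also needs that CA via its `oidc-ca-file` flag.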

The separately mentioned CoreDNS is not part of the clusters; it provides DNS for the homelab. The machine names themselves are managed by the Fritz Box.

Currently I manage the CoreDNS configuration manually, but I'm looking into automating it. This is a learning exercise, so I'm not looking for a ready-made solution, but rather want to build something myself.
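One possible shape for that automation, shown only as a sketch: keep the records as data and render the Corefile from them. It uses CoreDNS's `hosts` plugin with `fallthrough` for the homelab names and `forward` for everything else. The zone, record names, addresses and the upstream (assumed to be the Fritz Box) are all hypothetical.

```python
import ipaddress

# Hypothetical zone, records and upstream resolver; the post lists none of these.
ZONE = "homelab.lan"
UPSTREAM = "192.168.178.1"  # assumed Fritz Box LAN address
RECORDS = {"harbor": "192.168.178.200", "keycloak": "192.168.178.201"}


def render_corefile(zone: str, records: dict, upstream: str) -> str:
    """Render a Corefile that answers listed names from `hosts` and forwards the rest."""
    lines = [". {", "    errors", "    hosts {"]
    for name, ip in sorted(records.items()):
        ipaddress.ip_address(ip)  # raises ValueError on a malformed address
        lines.append(f"        {ip} {name}.{zone}")
    lines += [
        "        fallthrough",  # unknown names continue to the next plugin
        "    }",
        f"    forward . {upstream}",
        "    cache 30",
        "}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_corefile(ZONE, RECORDS, UPSTREAM), end="")
```

Rendering from data means a typo in an address fails before CoreDNS ever sees the file.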

As my Kubernetes distribution I use K3S, a lightweight Kubernetes distribution. For networking I use Cilium, a CNI plugin that provides eBPF-based networking. For the service mesh I use Istio, as it is compatible with Cilium.
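Running K3S with Cilium, MetalLB and Istio usually means switching off the K3S components they replace. The post doesn't show the actual server configuration, so this sketch renders an assumed `/etc/rancher/k3s/config.yaml`: the option names are real K3S flags, but the exact selection is an assumption, and the helper names are hypothetical.

```python
def k3s_server_config(use_docker: bool) -> dict:
    """Assumed K3S server settings for a Cilium + MetalLB + Istio stack."""
    config = {
        # Cilium replaces flannel and K3S's built-in network policy controller.
        "flannel-backend": "none",
        "disable-network-policy": True,
        # MetalLB replaces ServiceLB; Istio's ingress gateway replaces Traefik.
        "disable": ["servicelb", "traefik"],
    }
    if use_docker:
        # Run pods through Docker Engine (via cri-dockerd) instead of containerd.
        config["docker"] = True
    return config


def to_yaml(config: dict) -> str:
    """Serialise a flat dict of strings, booleans and string lists as YAML."""
    out = []
    for key, value in config.items():
        if isinstance(value, bool):
            out.append(f"{key}: {str(value).lower()}")
        elif isinstance(value, list):
            out.append(f"{key}:")
            out.extend(f"  - {item}" for item in value)
        else:
            out.append(f'{key}: "{value}"')
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    # The Mini PCs run Docker Compose as well, so they use Docker Engine.
    print(to_yaml(k3s_server_config(use_docker=True)), end="")
```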

For load balancing I use MetalLB, a load balancer implementation for bare-metal Kubernetes clusters. I plan to replace it with Cilium's load balancer, which is currently in alpha.
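In Layer 2 mode, MetalLB needs an `IPAddressPool` to hand out service addresses and an `L2Advertisement` that announces that pool on the LAN. A sketch that builds both manifests, assuming a hypothetical address range outside the Fritz Box's DHCP range and the default `metallb-system` namespace. Because both clusters share the same LAN, each would need its own non-overlapping range.

```python
import json

# Hypothetical address range: pick a block outside the Fritz Box DHCP range.
POOL_RANGE = "192.168.178.200-192.168.178.220"


def metallb_l2_manifests(pool_name: str, address_range: str) -> list:
    """Build an IPAddressPool plus an L2Advertisement that announces it."""
    pool = {
        "apiVersion": "metallb.io/v1beta1",
        "kind": "IPAddressPool",
        "metadata": {"name": pool_name, "namespace": "metallb-system"},
        "spec": {"addresses": [address_range]},
    }
    advertisement = {
        "apiVersion": "metallb.io/v1beta1",
        "kind": "L2Advertisement",
        "metadata": {"name": f"{pool_name}-l2", "namespace": "metallb-system"},
        # Announce only this pool (via ARP) on the local network.
        "spec": {"ipAddressPools": [pool_name]},
    }
    return [pool, advertisement]


if __name__ == "__main__":
    items = metallb_l2_manifests("lan", POOL_RANGE)
    # kubectl applies a "List" of objects in one go.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```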

Base Cluster Setup

The base setup of the clusters is as follows:

- K3S as Kubernetes distribution

- Cilium as CNI, also providing cross-cluster networking

- MetalLB as Load Balancer

- Istio as Service Mesh/Ingress Gateway

- Cert Manager as Certificate Manager, managing my own CA and certificates

- Flux as GitOps operator, to manage the cluster configuration

- ArgoCD as GitOps operator, to manage the application configuration

- Tekton as CI/CD solution

- Prometheus for collecting Metrics

- Grafana for visualizing Metrics

- Grafana Tempo for storing and processing Traces

- OpenTelemetry for collecting Traces and sending them to Tempo

- Gitstafette for relaying GitHub webhooks to the clusters, as the clusters are not directly accessible from the Internet
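Because the webhooks arrive through a relay rather than straight from GitHub, it is worth checking that an event really came from GitHub. GitHub signs each delivery with HMAC-SHA256 over the raw body, using the webhook secret, and sends the result in the `X-Hub-Signature-256` header as `sha256=<hex digest>`. A minimal sketch of that check, assuming the relay passes the body and header through unchanged (not verified against Gitstafette; the function name is hypothetical):

```python
import hashlib
import hmac


def valid_github_signature(secret: bytes, payload: bytes, signature_header: str) -> bool:
    """Check GitHub's X-Hub-Signature-256 header for the given raw request body."""
    if not signature_header.startswith("sha256="):
        return False
    expected = "sha256=" + hmac.new(secret, payload, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, signature_header)
```

The check must run on the exact bytes GitHub sent; re-serialising parsed JSON would change the digest.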

Next

In later posts, I'll dive into more detail on how the clusters are configured.